Most of TMI’s clients have strong engineering capability and a legacy of advancing technical capability. Thus, they are “genetically incapable” of offering only a minimum-compliance product. Their product offers are threshold-plus and, often, objective level. Yet despite RFPs stating “best value to government” as the basis for award, the offeror with the lowest Total Evaluated Price (TEP) and threshold-level performance often wins. This month’s Winning Insights post presents insights on how to win best value trade-off source selections.

Imperative for Advances. While the need to counter the threat continues, competition policy results in threshold capability equal to the lowest competing product, to the detriment of mission capability. In recent years, policy has defined two categories of source selection strategy: (1) Lowest Price Technically Acceptable (LPTA) and (2) best value trade-offs. Best value trade-offs provide two alternatives: (1) subjective judgment and (2) Value Adjusted Total Evaluated Price (VATEP).

The collapse of the Soviet Union and acquisition reform shifted acquisitions from threat-driven, spec-based prescriptive RFPs to performance-based RFPs, wherein only a few key performance parameters (KPPs) are required. Based on the claims of competitors, an objective beyond threshold is derived to define the performance vs. cost trade space (where capability trade-offs could be valued analytically or subjectively).
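The analytic side of that trade space can be sketched in a few lines of Python. This is a minimal illustration of the VATEP idea, not the procedure of any particular RFP: it assumes a single credited parameter and a linear dollar credit that runs from zero at threshold to a stated maximum at objective. The function name, the offerors, and every number are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Offer:
    name: str
    tep: float          # Total Evaluated Price in $M (hypothetical)
    performance: float  # proposed value of the single credited parameter


def value_adjusted_tep(offer, threshold, objective, max_credit):
    """Return TEP minus a linear credit for performance above threshold.

    Assumption: the credit grows linearly from 0 at threshold to
    max_credit at objective; performance beyond objective earns no more.
    """
    if objective <= threshold:
        raise ValueError("objective must exceed threshold")
    if offer.performance < threshold:
        raise ValueError(f"{offer.name} misses threshold and is not awardable")
    credited = min(offer.performance, objective) - threshold
    return offer.tep - max_credit * credited / (objective - threshold)


# Hypothetical inputs, for illustration only.
offers = [
    Offer("Threshold offeror", tep=100.0, performance=50.0),
    Offer("Threshold-plus offeror", tep=108.0, performance=80.0),
]
adjusted = {
    o.name: value_adjusted_tep(o, threshold=50.0, objective=100.0, max_credit=20.0)
    for o in offers
}
winner = min(adjusted, key=adjusted.get)  # lowest value-adjusted price
print(adjusted, winner)
```

With these made-up inputs the threshold-plus offeror has the higher TEP but the lower value-adjusted price. The result depends entirely on how much credit the RFP assigns, which is why shaping that credit before the RFP is released matters.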

Asymmetric Product Performance. Few (if any) “clean piece of paper” spec-driven new starts and a risk-averse environment have resulted in leveraging non-developmental items (NDI), off-the-shelf (OTS) products, and the contractor’s own product portfolio. Thus, customer source selection (SS) is a choice among acceptable but differing levels of capability.

So What? In the broader chemistry of source selection, where competitors meet KPPs and the other thresholds, claiming and substantiating greater product performance prompts the “so what” question. That is: Nice to have? Gold plating? Further, it could prompt the perception of added risk and cost. In the SS process, the operative questions become:

– Does added performance make a demonstrable difference?

– And is the difference worth the possible additional cost to the government?

Collaborating with select customer members to help further define and refine the questions and “monetize” the added value in the context of the mission is the capture challenge. In short, the challenge is to illustrate via mission analysis the difference that performance makes; get source selection “standing” for added capability; sell the case that the difference is meaningful; and substantiate the value in the proposal.

Selling Additional Capability. The necessary conditions for selling additional capability are:

– It makes a demonstrable difference in mission outcome or provides capability not otherwise affordably available;

– The technical evaluation factor/subfactors give “standing” in source selection evaluation to the value of added military utility; and

– It provides cost savings (cost effective operationally or logistically).

Among the sufficient conditions are:

– The contractor pre-sells and substantiates the value of added military utility in an accepted (by user and/or doctrine command) operational context;

– If added capability costs more, the customer has funds to pay for it; and

– The PCO, concerned about a protest by an LPTA competitor, does not stand in the way.

Note: during the 1990s and 2000s, more than 70% of best value awards (albeit most were subjective trade-offs) went to the contractor with the lowest Most Probable Cost (MPC) to the government. Wonder why? As best value source selections “play out,” the LPTA “anchor” often becomes the easy answer.

Meaningful. Advancing added performance from just “interesting” to meaningful in the mind of the customer requires the context of a CONOPS. In the early stages of an acquisition, the CONOPS is the framework for requirements definition. As stated in CJCSI 3170 JCIDS: “CONOPS provide the operational context to examine and validate capabilities required to solve a current or emerging problem.” A CONOPS should cover: (1) problem addressed, (2) mission, (3) Commander’s intent, (4) operational overview, (5) functions/effects to be achieved, and (6) roles and responsibilities of affected organizations. Individual Services have specific command centers for guidance and formats, e.g., Naval Warfare Development, Army TRADOC, Marine Combat Development, and USAF Doctrine and Regional Commands.

Demonstrable Difference? A CONOPS alone will not answer the central SS question. However, mission analysis (with applicable measures of effectiveness), in the context of the CONOPS, can answer it. Acceptance of the answer depends on the CONOPS, modeling, and measures reflecting the input of the user, Requirements Officer, program sponsor, and customer mission analysis organizations. Sharing the results along the way and responding to the issues raised is the ultimate pre-sell process, leading to validation and acceptance by the customer.

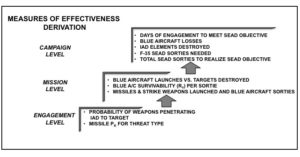

Measures of Effectiveness. Measures are derived for the specific mission, product performance effects, and level (engagement, mission, campaign) of analysis as shown in the strike weapon example below. Engagement level analysis illustrates product performance differences. When rolled up as input to mission level analysis, these result in the quantified demonstrable difference.

Strike Weapon Example

Raising the Stakes. If the engagement level results do not monetize the added performance value clearly enough to justify the choice, then escalate to the mission level, where the stakes are higher. In the strike weapon example, the results of added weapon launch range may not justify the added cost in engagement level analysis. However, moving up to the mission level brings the cost leverage of launch platform survivability into play: avoiding air defense exposure and the loss of even a few launch platforms is a huge cost benefit.
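The arithmetic behind that escalation can be sketched as follows. This is a deliberately simplified illustration, not a mission model: it assumes engagement level cost is just weapons expended, and mission level cost adds expected launch platform losses (sorties times loss rate times platform cost). All function names and figures are hypothetical.

```python
def engagement_cost(unit_cost, weapons_per_target, targets):
    """Engagement level view: only the weapons expended are counted ($M)."""
    return unit_cost * weapons_per_target * targets


def mission_cost(unit_cost, weapons_per_target, targets,
                 sorties, loss_rate, platform_cost):
    """Mission level view: weapons expended plus expected platform losses ($M)."""
    expected_losses = sorties * loss_rate  # expected number of platforms lost
    weapons = engagement_cost(unit_cost, weapons_per_target, targets)
    return weapons + expected_losses * platform_cost


# Hypothetical inputs, for illustration only (costs in $M).
campaign = dict(weapons_per_target=2, targets=100)
exposure = dict(sorties=50, platform_cost=80.0)

# Shorter-range weapon: cheaper, but the launch platform enters air defense range.
short_engagement = engagement_cost(unit_cost=0.5, **campaign)
short_mission = mission_cost(unit_cost=0.5, loss_rate=0.04, **campaign, **exposure)

# Longer-range weapon: costlier, but launched from outside most of the defended area.
long_engagement = engagement_cost(unit_cost=0.8, **campaign)
long_mission = mission_cost(unit_cost=0.8, loss_rate=0.01, **campaign, **exposure)

print(short_engagement, long_engagement)
print(short_mission, long_mission)
```

With these made-up inputs, the longer-range weapon is the more expensive choice at the engagement level and the less expensive one at the mission level, because expected platform losses dominate the total. A real analysis would draw loss rates and sortie counts from customer-accepted modeling rather than assumed constants.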

Shaping SS Evaluation Criteria. The typical Section M (RFP) evaluation basis for award states: “… this acquisition will utilize a trade-off source selection IAW FAR 15.3 to make an integrated assessment for a best value award decision.” This is not specific enough to get evaluation “standing” for added capability. The necessary condition for added capability is a technical subfactor that states, for example:

“Evaluation credit will be given when an offeror’s product performance exceeds threshold to the extent that the proposal substantiates the added military utility at the mission system level.”

The sufficient condition is that the customer has the additional funds (if needed) and the PCO’s state of mind isn’t burdened with the LPTA anchor.

Countering Perceptions. The burden of proof is on the contractor to discount or eliminate the perception that added performance equates to added cost and risk. The problem is: how? Added cost doesn’t mean unaffordable. The key is to show the VALUE gained for the added cost. Risk can be discounted by using IRAD or company investment to demonstrate technology maturity. Additionally, “Miracle of the Month” demos during the pre-RFP phase, together with a demonstrated history of controlling risk, will further discount the perception of risk.

The proposal alone, even with substantiation, cannot make the case. Shaping the SS strategy and RFP, cultivating user pull (for mission capability), and building acquisition push (the capability is affordable in a low-risk, executable program) are musts.

TMI’s subject matter experts in CONOPS, mission analysis, and business case analysis are available to help define your plan to determine the demonstrable difference and answer the question of whether the added capability is worth it.